Kian Masters is a Senior Associate and Li Zhang is Portfolio Manager and Executive Director in the Global Balanced Risk Control team at Morgan Stanley Investment Management (MSIM). This post is based on an MSIM memorandum by Mr. Masters; Ms. Zhang; Andrew Harmstone, Managing Director and Senior Portfolio Manager; and Christian Goldsmith, Managing Director, at Morgan Stanley Investment Management.

Related research from the Program on Corporate Governance includes The Illusory Promise of Stakeholder Governance by Lucian A. Bebchuk and Roberto Tallarita (discussed on the Forum here); Does Enlightened Shareholder Value Add Value? (discussed on the Forum here) and Stakeholder Capitalism in the Time of COVID (discussed on the Forum here), both by Lucian A. Bebchuk, Kobi Kastiel and Roberto Tallarita; and Restoration: The Role Stakeholder Governance Must Play in Recreating a Fair and Sustainable American Economy – A Reply to Professor Rock by Leo E. Strine, Jr. (discussed on the Forum here).

At a Glance

- Big Tech has grown incredibly large, fueled by the unprecedented collection, processing and analysis of digital data.

- Yet this growth has conflicted with users’ interests, particularly in areas related to user privacy and misinformation. This may raise questions about the sustainability of Big Tech’s growth models.

- At the same time, these companies’ concentrated market power also raises questions about potential antitrust action.

- Corporate governance structures appear inadequate for guiding more effective self-regulation, which raises regulatory risks at a time when technology is being buffeted by macro headwinds.

- In our view, shining a sustainability light on the dark side of Big Tech can yield important insights into risks that traditional (non-ESG) approaches may under-appreciate.

Introduction

In today’s digital economy, data is the key resource underpinning economic value creation. Data is crucial to the development of most online services and is indispensable to the development of emerging technologies such as artificial intelligence and machine learning. Capturing and analysing data is therefore central to the business models of some of the most successful companies in today’s economy. However, as with the overuse of natural resources, the pervasive collection of data, particularly personal data, has negative externalities that cannot be ignored.

In this paper, we focus on the “Big Tech” companies that dominate the new data economy. We discuss the potential social consequences associated with digital data mining and assess whether these issues might become a headwind for these data-driven companies, which are increasingly in the shadow of the regulator.

By “Big Tech,” we are primarily referring to mega-cap technology companies headquartered in the U.S. [1] While these companies are not homogeneous, we find it analytically useful to group them together, given their societal omnipresence and market power.

The Rise and Rise of Big Tech

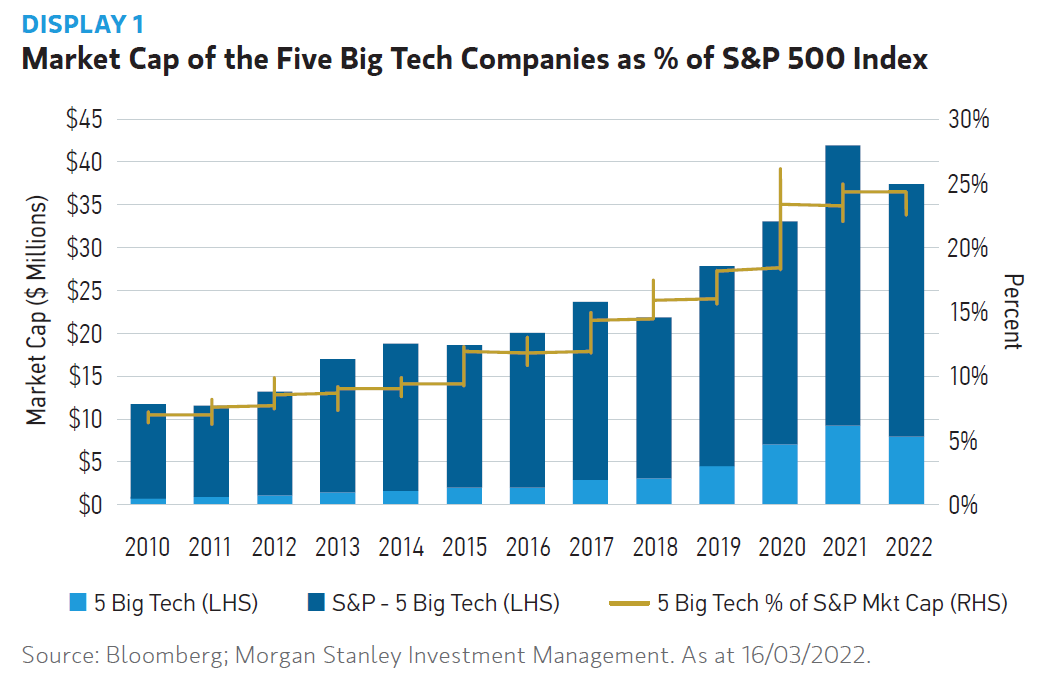

Notwithstanding recent market volatility, Big Tech firms have grown to be among the largest public companies in the world. As a result, U.S. large-cap capitalization-weighted indices such as the S&P 500 are now much less diversified. In fact, just five companies in our “Big Tech” category make up almost 23% of the S&P 500, with the two largest constituents’ combined weight (13.0%) now larger than that of the Real Estate, Energy, Materials and Utilities sectors combined (11.5%).

Big Tech is the Internet

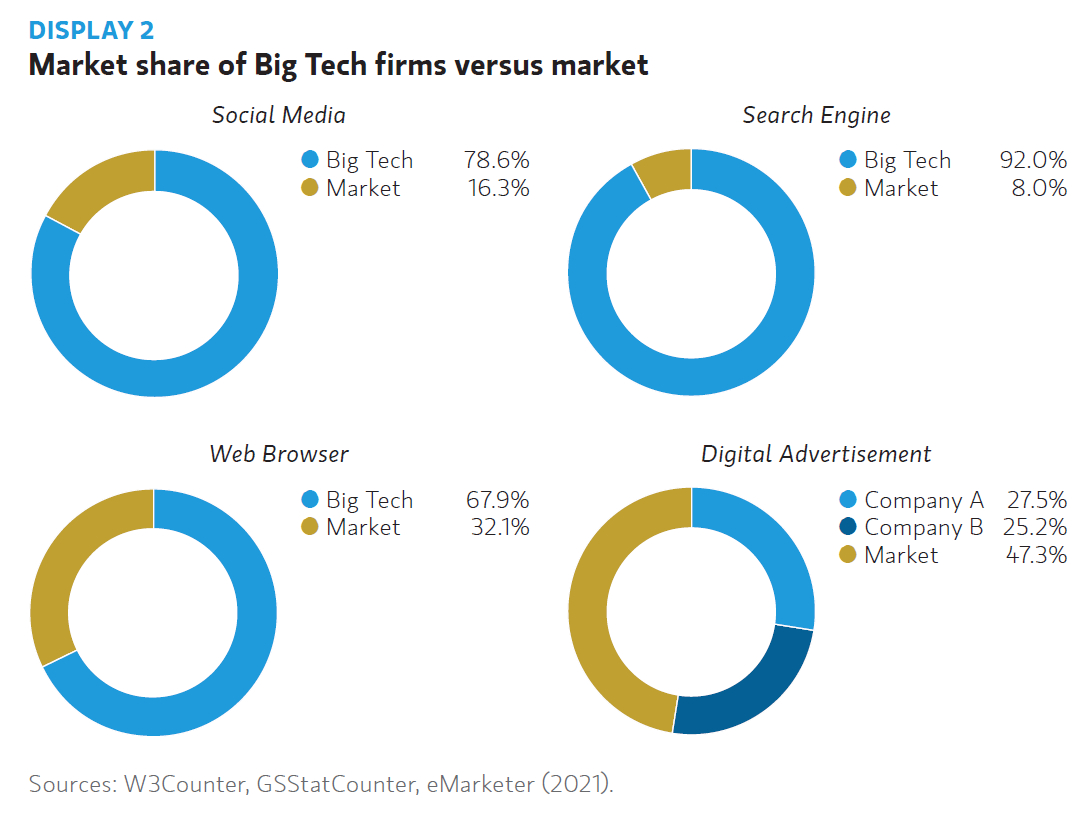

Big Tech firms typically provide access to digital platforms, which connect buyers and sellers or individual users. These platforms often benefit from strong network effects and economies of scale, which reinforce a potential “winner takes all” dynamic. Put simply, the more a product is used, the more useful it becomes. Consider online social networks, which are more useful to individuals if everyone they know is on the same platform.

Data facilitates these network effects: a firm can make its product more useful either by reinvesting revenues derived from user data or by exploiting insights derived from collected user-interaction data. A more useful product, in turn, attracts more users and drives more revenue. These same network effects and economies of scale may explain the concentration seen in some segments of the digital economy.

Growth vs User Protection?

Big Tech’s thirst for growth is leading toward an inevitable conflict between its interests and those of users, a conflict that regularly manifests as risks under the social pillar of ESG. We believe that investors who fully appreciate these social risks may benefit, particularly as these conflicts materialise and attract the attention of regulators in key markets.

For example, the more personal data a platform harvests and stores, the more the platform’s operator will be exposed to risks around privacy concerns. Additionally, the addictive nature of many platforms also raises the potential for societal problems such as damage to users’ mental health, misinformation and even extremism. These issues may pose a risk to companies’ value creation by limiting their growth prospects, either through the migration of users or advertisers or, increasingly, through regulatory and competition initiatives.

Social Responsibility

The Algorithm Decides

For Big Tech companies that rely on data harvesting, engagement is key to growth. Big Tech platforms can capture more valuable data by keeping user attention fixed for longer. The drive to maximize data collection and therefore value has resulted in systems optimized to capture and retain users’ attention, with little regard for consequences.

Content is a case in point. Material that elicits a strong emotional reaction has proven highly effective in capturing user attention and prolonging engagement. It is little wonder, then, that the pursuit of engagement can lead to algorithms designed to amplify and promote sensational and divisive content. [2]

Consider, too, that algorithms curate content based on a user’s unique interests. Such personalization limits exposure to outside views in a process that can create view-distorting “filter bubbles.” Roger McNamee, the renowned early Big Tech investor turned activist, claims that filtering results in social polarization, rising mental health issues, hate speech and even violence. [3]

On the one hand, this combination can prove fertile ground for the spread of disinformation. For example, disinformation related to Covid-19 and vaccines has proliferated across social media, contributing to vaccine hesitancy among some populations. [4] Vaccine hesitancy was a recognized concern even before the pandemic: in 2019, the World Health Organization added it to its list of the top 10 threats to global health. [5]

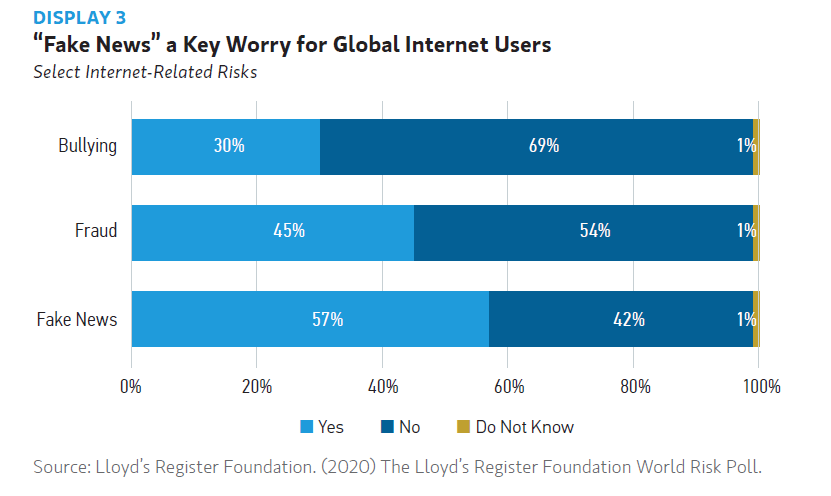

On the other hand, global society is not oblivious to the dangers of indiscriminate algorithmic preferencing. A recent worldwide poll of 150,000 people in 142 countries, conducted by the Lloyd’s Register Foundation and Gallup, found that internet users view “fake news” as their greatest concern. [6] This suggests that users may ultimately push back: platforms that fail to address the scourge of “fake news” may see users, and their valuable data, migrate elsewhere.

Self-Regulation—A Solution?

While algorithms may lack the value systems of humans, they reflect the biases of their creators. The proliferation of algorithms in sorting and selecting content places a responsibility on their creators to ensure they are trained on sufficiently diverse data sets. Failure to self-regulate could invite burdensome requirements, such as greater transparency or limits on the use of algorithmic prioritization, like those proposed by U.S. House lawmakers in the Filter Bubble Transparency Act.

To date, tech companies have been slow to address this problem, save in extreme cases. This is due in part to Section 230 of the Communications Decency Act, a piece of U.S. legislation enacted in 1996 that grants platforms wide immunity from liability for harmful content posted by their users.

However, support has emerged to amend Section 230, and the changes could have profound effects. Each internet platform company operating in the U.S. has explicitly identified Section 230 amendments as a business risk. Even if exposed companies manage to avoid costly sanctions through self-regulation, they would likely be forced to invest significantly in content-management initiatives, particularly as their presence grows in emerging markets, which have varying cultural and linguistic requirements. Because operating expenses in technology can be high even before these added outlays, additional spending on content monitoring may surprise to the downside. Moreover, if users feel that overzealous moderation is infringing on their free speech rights, they may seek out alternative, more laissez-faire platforms. This highlights the delicate balance today’s virtual public squares must strike between free speech and content moderation.

Privacy Paradox

As more activities move online, data privacy and protection have become paramount. Data privacy is centered around how data is collected, processed, stored and shared with third parties. Handling troves of digital personal data leaves Big Tech companies highly exposed to concerns around privacy. However, do users care about privacy? The evidence is mixed.

Users voluntarily accept lengthy and complex terms and conditions (T&Cs) that detail how the platform and its partners use their personal data for commercial purposes. A study of 2,000 U.S. consumers found that 91% consent to T&Cs without reading them, [7] a practice commonly associated with so-called “click-wrap” agreements. It is therefore likely that users do not fully comprehend the volume of data gathered about them.

Yet studies show that users do care about the scale and intensity of data harvesting when it is disclosed to them. [8] Given the frequent controversies around data use, we believe users may become more discerning. While they are typically happy to share data in exchange for a useful, free or personalized product, they may be more likely to withhold permission for services they do not highly value, such as targeted advertising.

A 2021 UBS survey of circa 2,000 Americans found that 42% of respondents had already updated their privacy settings on a social media site to be more restrictive and 22% planned to. Notably, the share of teenage users planning to update their privacy settings has risen consistently, indicating that younger cohorts may find the privacy/personalization trade-off less worthwhile than older generations do. [9]

Given some Big Tech companies’ heavy reliance on data-informed advertising revenue (more than 95% of 2021 revenues for some of the largest companies in our set), there is a strong economic motivation to extract as much user data as possible. This impulse runs headfirst into the privacy interests of users and, increasingly, of regulators.

ESG Sharpens the Oversight Spotlight

These social issues come against the backdrop of an ongoing global debate about whether Big Tech’s market power and business practices have become detrimental to consumer welfare, competition and productivity. As it stands, technology is lightly regulated in comparison with other sectors. We believe this is for a variety of reasons, not least that the internet industry is comparatively young and rose to prominence in an era when American public discourse viewed industrial regulation as burdensome and innovation-limiting.

However, growing scrutiny of the business practices of today’s tech titans is fostering bipartisan support in key markets such as the U.S. and Europe to curb their influence. As the negative externalities associated with Big Tech become more widely recognized, the end of the “light touch” internet regulatory regime may be near.

Privacy Laws Limit The Flow Of Data

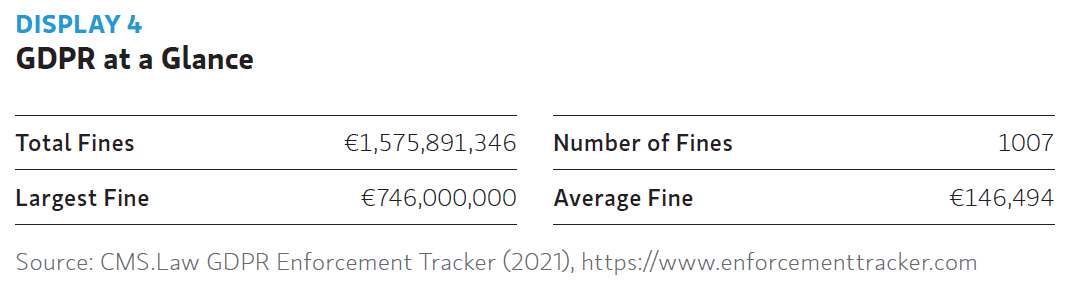

Regulating data sharing via privacy laws is one way to address these concerns. According to the United Nations Conference on Trade and Development, 137 out of 194 countries have passed legislation designed to protect data and privacy. The EU’s General Data Protection Regulation (GDPR), adopted in 2016, is among the most comprehensive data privacy laws anywhere. Among other things, GDPR requires a lawful basis, such as the individual’s consent, for processing personal data, thereby giving individuals greater protection and control. Due to its extraterritorial reach, companies based outside the EU can fall within the scope of GDPR if they collect and process the personal data of individuals in the EU.

Non-compliance with GDPR can result in heavy penalties and reputational damage, including eye-watering fines of up to €20 million or 4% of annual global revenue, whichever is higher. It should be noted that interpretation of GDPR varies within Europe and, while fines have generally been increasing, regulators have shown restraint. No company has yet been hit with the headline 4% fine, and few expect that to change.

Recent court rulings in Europe continue to make transferring data outside the EU highly complex for tech companies operating in the U.S. In its Schrems II judgment, the Court of Justice of the EU declared that companies that intend to transfer personal data outside the EU must ensure that GDPR-equivalent protection is provided, or else suspend the transfer.

We expect that the evolution of data protection regulations globally will continue to force changes to company practices, slowing growth through loss of data, lower efficacy and poorer quality of content. Continued concerns around data collection, combined with the slow pace of change at companies, mean that a worst-case scenario such as being locked out of markets, while unlikely, should not be dismissed entirely. Investors should therefore be mindful of the fundamental risks that privacy regulations pose.
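As a rough illustration of how the upper tier of GDPR fines scales with company size, the cap can be written as the higher of the two thresholds. Here R is assumed to denote total worldwide annual turnover for the preceding financial year, the basis used in GDPR Article 83(5); the revenue figures in the worked examples are hypothetical and do not refer to any company in our set.

$$
F_{\max} = \max\left(\text{EUR } 20\text{ million},\; 0.04 \times R\right)
$$

For a hypothetical company with R = EUR 100 billion, 4% of turnover is EUR 4 billion, so the cap is EUR 4 billion. For R = EUR 200 million, 4% is only EUR 8 million, so the EUR 20 million floor applies instead. The percentage threshold dominates for any company with turnover above EUR 500 million, which is why it is the relevant figure for mega-cap firms.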

U.S. Antitrust Growing FAANGS?

Experts believe that Big Tech’s stewardship of large pools of user data confers substantial advantages. They point to the feedback loop created by the network effects described earlier. The OECD notes that “the dominant platform may not do anything that can be properly qualified as anti-competitive, and yet the feedback loop can reinforce dominance and prevent rival platforms from gaining customers.”

Consequently, many argue that today’s Big Tech companies exert monopoly power that can block competitors and disadvantage users in ways that would not have been permissible for most of the 20th century. Europe once again leads the charge against Big Tech on this front. The EU’s proposed Digital Markets Act seeks to blacklist certain anticompetitive business practices that have allegedly allowed Big Tech to exploit its size and entrench its position as “gatekeepers.” The Act is expected to come into force at the end of 2022 once approved by both the European Parliament and the Council.

The U.S. has taken some steps toward addressing Big Tech’s power. We first discussed this in our July 2021 Insight, [10] where we highlighted Lina Khan’s appointment to chair the Federal Trade Commission (FTC), the U.S. competition regulator, as well as a raft of bills introduced in Congress to amend antitrust law and make enforcement easier.

However, despite Europe’s proposals and the tentative steps taken in the U.S. to curb Big Tech’s power, the limited antitrust risk premium built into these firms’ valuations suggests that few believe today’s tech titans will be brought to heel. Over the longer term, we are less sanguine than the market. For instance, over the next three to four years, we believe that antitrust developments could hurt Big Tech’s growth prospects, particularly when it comes to M&A, which is likely to face intense scrutiny on both sides of the Atlantic.

Governance Growing Pains

Taken together, the recent strengthening of antitrust and privacy enforcement has grown out of these companies’ perceived failure to self-regulate. The failure to self-regulate may reflect a culture where leadership often goes unchallenged. Indeed, decision-making power is often heavily concentrated, with founders exerting significant influence on strategy, be it through force of personality or corporate shareholding structures.

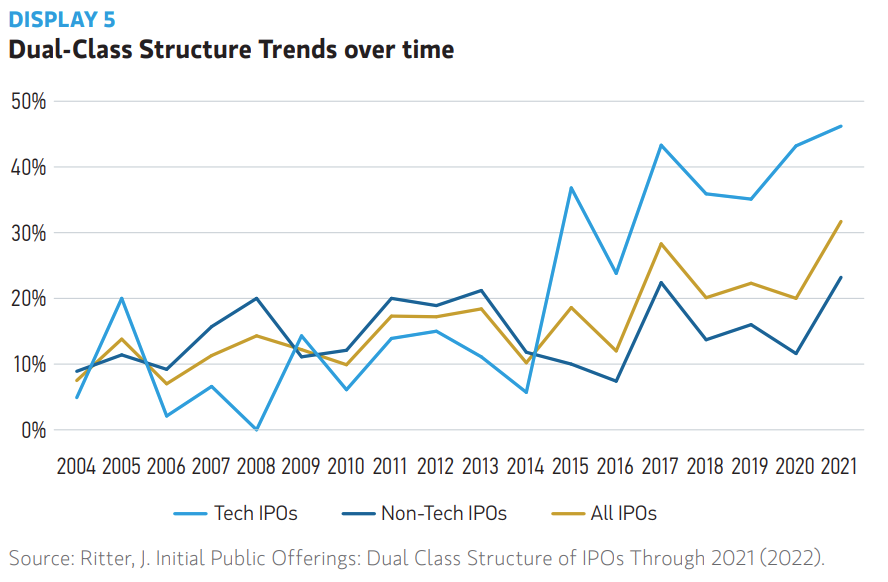

Dual-class stock, which is prevalent in tech, entitles the owner(s) of certain shares to voting rights that exceed their claim on cash flows. Some believe that this allows a founder or founder group to pursue a more beneficial long-term strategy rather than being constrained by short-term considerations.

Consequently, investors will have to grapple with certain trade-offs. They must assess not only the nature of the shareholdings, but also the nature of the company, industry and controller. And while we agree that simplified control structures may be beneficial in a company’s early growth stages, they place enormous power in the hands of leadership and the select few employees to whom leaders delegate key responsibilities.

Given the size and complexity of Big Tech organizations, we believe this concentration of power should be recognized as a risk, one exacerbated by the increasing frequency with which these companies find themselves in the regulatory spotlight. Structuring decision-making processes to move away from the narrow views of a few and incorporate more diverse perspectives could help mitigate some of these regulatory and reputational risks.

The Investment Outlook for Big Tech

While Big Tech has enjoyed a relatively unhindered rise to prominence, the headwinds are getting stronger. In the near term, the macro backdrop of slowing growth, ongoing inflationary pressure and the resulting rise in nominal and real rates means that we generally expect developed-market growth stocks like Big Tech to underperform.

Over the next couple of years, we expect that a more challenging operating environment, in the shadow of regulators and competition authorities on both sides of the Atlantic, may limit the growth potential on which Big Tech’s valuations depend.

As outlined throughout this piece, many of the biggest risks facing Big Tech are related to the “S” pillar within ESG. Issues around privacy and social responsibility may not be considered material by a traditional investor; however, an approach that fully integrates ESG could provide a more thorough understanding of what drives the risks for Big Tech. Given that these companies are among the largest constituents of major equity benchmarks such as the S&P 500, both active and index investors should consider the potential impact of these ESG risks.

The complete publication, including footnotes, is available here.

Endnotes

[1] However, a number of relatively small companies that exhibit similar business practices focused on data collection share the same ESG risks we touch on.

[2] Cobbe, J., & Singh, J. (2019). Regulating Recommending: Motivations, Considerations, and Principles. European Journal of Law and Technology, 10(3).

[3] McNamee, R. (2019). Zucked. Penguin Random House.

[4] Rutschman, A. S. (2021). Social Media Self-Regulation and the Rise of Vaccine Misinformation. SSRN.

[5] World Health Organization. (2019). Ten Threats to Global Health in 2019.

[6] Lloyd’s Register Foundation. (2020). The Lloyd’s Register Foundation World Risk Poll.

[7] Deloitte. (2017). 2017 Global Mobile Consumer Survey: US Edition.

[8] Pew Research Center. (2019). Facebook Algorithms and Personal Data.

[9] UBS. (2021). Assessing the Consumer Usage & Ad Engagement Landscape (14th ed.).

[10] Morgan Stanley Investment Management. (2021). Fed’s hawkish surprise, yet markets unruffled.
