Alex Kim is a PhD Student, Maximilian Muhn is an Assistant Professor of Accounting, and Valeri Nikolaev is James H. Lorie Professor of Accounting and FMC Faculty Scholar at the University of Chicago, Booth School of Business. This post is based on their recent paper.

In an era marked by political turbulence, climatic unpredictability, and rapid technological transformations, corporations are subject to multi-dimensional risks. As these risks have become more and more complex and important over time, they have profound implications for sustainable growth and stakeholder value. Therefore, in our latest study “From Transcripts to Insights: Uncovering Corporate Risks Using Generative AI”, we examine the potential of Generative AI technologies, specifically large language models (LLMs) such as ChatGPT, to assess corporate risks from firm disclosures, assisting stakeholders in making well-informed decisions amidst rising uncertainty.

Recent studies typically develop textual measures of corporate risk by calculating dictionary-based bigram frequencies. This methodology relies on the co-occurrence of risk-related terms drawn from pre-constructed dictionaries. For instance, a bigram-based search algorithm recognizes instances where “economic policy” is mentioned alongside the term “risk”. This literature has undeniably enhanced our understanding of corporate risks. Yet our study finds that AI can offer deeper, more sophisticated insights into textual data, providing a layered understanding of diverse corporate risks.
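To make the bigram idea concrete, the following is a minimal sketch, not the implementation used in any of the studies mentioned. The dictionaries `TOPIC_BIGRAMS` and `RISK_WORDS`, the function name `bigram_risk_score`, and the 10-word window are all hypothetical choices made for illustration.

```python
import re

# Hypothetical dictionaries, for illustration only; real studies build
# much larger lists from training text.
TOPIC_BIGRAMS = {("economic", "policy"), ("trade", "tariffs")}
RISK_WORDS = {"risk", "risks", "uncertainty", "uncertain"}


def bigram_risk_score(text, window=10):
    """Share of bigrams that are topic bigrams with a risk word nearby.

    The window size is an assumption, not a value taken from the post.
    """
    tokens = re.findall(r"[a-z]+", text.lower())
    if len(tokens) < 2:
        return 0.0
    hits = 0
    for i in range(len(tokens) - 1):
        if (tokens[i], tokens[i + 1]) in TOPIC_BIGRAMS:
            # Look for a risk word within `window` tokens on either side.
            lo = max(0, i - window)
            hi = min(len(tokens), i + 2 + window)
            if any(t in RISK_WORDS for t in tokens[lo:hi]):
                hits += 1
    # Normalize by the number of bigrams in the document.
    return hits / (len(tokens) - 1)
```

A measure of this kind only fires when the dictionary terms literally appear, which is why it can miss risks that are discussed implicitly.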

Conceptually, generative language models, such as ChatGPT, offer two pivotal advantages in risk analysis. First, their expansive knowledge enables a comprehensive understanding of a given text. These models, which have been trained on extensive data, are capable of generating insights combining knowledge from a myriad of related documents. This “general AI” aspect is particularly promising, as risks are not always explicitly discussed in corporate disclosures. Second, these models not only quantify but also provide the “rationale” of the produced risk metrics through coherent narratives, generating “humanly-readable” interpretation and evaluation of risk information.

Even though LLMs have great potential in evaluating corporate risks, their efficacy in assessing firm-specific risks is not yet well understood. To address this question, we use OpenAI’s GPT-3.5-Turbo LLM to construct risk exposure metrics at the firm level. Using earnings conference call transcripts from January 2018 to March 2023, we examine whether GPT-based risk indices can predict stock market volatility and associated economic outcomes.

This study focuses on the evaluation of major corporate risks impacting firms’ stakeholders, namely: political risk, climate-related risk, and AI-related risk. The novelty of AI risk is of particular interest as it allows the exploration of language models’ capability to gauge emergent risks.

We generate two principal outputs for these risk areas: (1) Risk Summaries, for which we direct the GPT model to provide summaries strictly based on the document’s content, and (2) Risk Assessments, for which we instruct the GPT model to incorporate its vast general knowledge when interpreting the document, producing a comprehensive judgment on the given risk. To quantify these risk exposures, we use a straightforward metric: the ratio of the length of the risk summary or assessment to the full length of the document. A higher ratio indicates greater risk exposure.
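The ratio described above can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the post does not say whether length is counted in words, characters, or tokens, so the whitespace-separated word count used here is an assumption, and the function name `risk_exposure` is hypothetical.

```python
def risk_exposure(generated_text, transcript):
    """Ratio of the summary (or assessment) length to the transcript length.

    Length is measured in whitespace-separated words; this unit is an
    assumption, not something stated in the post.
    """
    doc_len = len(transcript.split())
    if doc_len == 0:
        return 0.0  # avoid division by zero for empty transcripts
    return len(generated_text.split()) / doc_len
```

For example, a 2-word summary of an 8-word transcript gives `risk_exposure("a b", "a b c d e f g h")`, which evaluates to 0.25.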

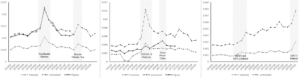

Our ChatGPT-based metrics show intuitive industry-specific patterns: the tobacco sector faces the most political risk, coal mining the most climate risk, and business services the most AI risk. As seen in the following figures, our risk measures exhibit intuitive time trends as well. Nevertheless, firm-specific components account for the majority of the variance in risk exposures (e.g., about 90% of the variance in political risk is firm-specific). This finding underscores how important it is for stakeholders to evaluate individual firm-level exposures to any given risk thoroughly.

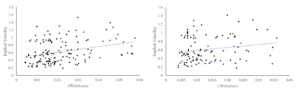

We then examine whether these GPT-based proxies are effective predictors of firms’ future stock price volatility. Our results show a strong positive correlation between GPT-based risk exposure measures and stock price volatility, particularly for political and climate risks (see figures below). Furthermore, GPT-generated risk assessments generally outperform (and in many cases even fully subsume) traditional bigram-based measures, highlighting the value of generative AI in providing insights about corporate risks. Remarkably, these results remain equally strong when we restrict the data to the period after ChatGPT’s training cutoff in September 2021. Additionally, during this more recent period, risks related to AI technology have begun to show significant correlations with stock market volatility.

Equipped with these (Chat)GPT-based measures, we also assess how firms react to these risk exposures. Consistent with economic theory, we find that companies generally decrease their investments when they are more exposed. Moreover, companies increase their lobbying activities to address political risks, file green patents in response to climate risks, and file AI-related patents in response to AI risks.

We then examine the relative importance of the AI-generated risk measures. Over our study period, the relative significance of political and climate risks fluctuates, especially around events like the Covid pandemic. The importance of AI risks, however, has risen steadily. Furthermore, in our final asset pricing tests, we show that investors require a higher risk premium for both environmental and AI risks.

Taken together, our study highlights the economic value of AI-powered language models in risk assessment. Although recent advancements suggest that this generation of Large Language Models could revolutionize information processing in capital markets (e.g., via summarizing complex corporate disclosures as explored in one earlier study of ours), their economic viability for risk assessment had remained unexplored. Our findings show their usefulness in assessing intricate risk information from narrative disclosures.

Moreover, we build upon earlier studies that leveraged corporate disclosures to gauge firm-level risk exposure by presenting a state-of-the-art AI-powered approach. In contrast to conventional bigram dictionaries, generative LLMs comprehend the more profound contexts underlying risks, thereby yielding richer insights.

In conclusion, we affirm the importance of general AI in unraveling complex topics. LLMs employ their broad-based knowledge to generate insights into corporate risks, often surpassing information that can be learned in isolation from the processed document. The AI’s proficiency in assessing risks through its extensive domain knowledge provides evaluations that are notably more comprehensive than those derived from localized, knowledge-based summaries.
